Performance Requirements

You must complete a sequence of 4 primary tasks:

- Drive along the path to locate the Lego figure

- Detect the figure and move it away from the danger area

- Drop off the figure at any one of the safe drop zones

- Return the rescue vehicle to the start point and stop

Guidance and Navigation Notes

- The start point is marked with a wide line of electrical tape perpendicular to the path

- There will be a smooth, continuous path outlined with red electrical tape.

- The path follows a concentric profile

- The path leads from the start point to the Lego figure

- There will be several safe drop zones along the path between the start point and Lego figure

- Some drop zones will intersect the path; others may be adjacent to it.

- The safe drop zones will be marked with green electrical tape

- The entire Lego figure must lie within the outer boundaries of the green tape to be considered a successful rescue

- The start point is also considered a safe drop point

- The entire Lego figure must lie within the starting block to be considered a successful rescue

- The Lego figure is located in the center of a bullseye target (danger area) and may be in any configuration the students desire

- The bullseye consists of a red circle inside of a blue circle

- The path does not go over any traps (rocks and sand boxes) or obstacles

- There are green trees as vertical markers near the safe drop zones

- The annotated blue “attempt line” indicates where an attempt will be counted if your entire device crosses that line.

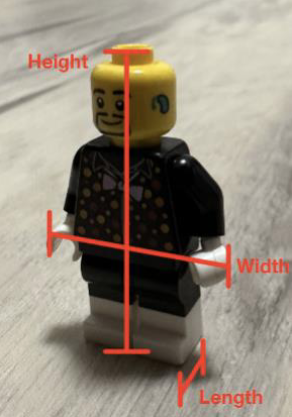

The Lego Man

- L = 9mm

- W = 26mm

- H = 40mm

Subsystem Concepts

- We want to use the Raspberry Pi as our main system (higher-level logic, vision, and sensor interpretation)

- We want to use the Arduino as our I/O middleware (low-level motor/servo commands and sensor reads)
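To make the Pi/Arduino split concrete, here is a minimal sketch of a line-based message format the Pi could send over serial (for example with pySerial's `Serial.write`). The `M,<left>,<right>*<checksum>` layout, the XOR checksum, and all function names are hypothetical choices for illustration, not an agreed protocol.

```python
def checksum(payload: str) -> int:
    """XOR of every byte in the payload (a simple, NMEA-style checksum)."""
    value = 0
    for byte in payload.encode("ascii"):
        value ^= byte
    return value


def encode_motor_command(left: int, right: int) -> bytes:
    """Build a hypothetical 'M,<left>,<right>*<checksum>' line for the Arduino.

    left/right are signed PWM duties; the sign selects direction and the
    magnitude is clamped to the Arduino's default 8-bit analogWrite range.
    """
    left = max(-255, min(255, int(left)))
    right = max(-255, min(255, int(right)))
    payload = f"M,{left},{right}"
    return f"{payload}*{checksum(payload):02X}\n".encode("ascii")


def decode_command(line: bytes):
    """Parse one received line into (name, [ints]); None if it is corrupt."""
    text = line.decode("ascii", errors="replace").strip()
    payload, sep, received = text.partition("*")
    try:
        if not sep or int(received, 16) != checksum(payload):
            return None
        name, *fields = payload.split(",")
        return name, [int(field) for field in fields]
    except ValueError:  # bad hex, bad ints, or non-ASCII payload
        return None
```

Keeping one command per newline-terminated line makes the Arduino side simple (it can read up to `'\n'`), and the checksum lets it drop lines garbled on the wire instead of driving the motors with bad values.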

Perception Subsystems

We need to solve each of the following problems (how many ways can we solve each one?)

Follow the red line

- Colour/semantic segmentation of the line vs everything else

- Simple thresholding

- HSV thresholding to isolate the tape

- Compute the centroid of the red region for action (steering)

- Neural network

- Train a CNN (deployed with TensorFlow Lite) to label pixels as “red” or as “background”/other objects

- Canny edge detection

- Detect edges in the camera feed and filter them by orientation

- Steer to keep the line centred

- Use an IR reflectance sensor to detect the line based on its reflectance

- Mount the IR sensor underneath the robot?

- Use a PID loop to keep the sensors aligned over the tape

- Use a dedicated colour sensor

- Read colour directly from hardware that already exists

Identify the bullseye

- Colour segmentation

- Simple thresholding

- Look for the colour sequence blue → white → red arranged in concentric circles

- Neural network

- The CNN would also identify “bullseye” pixels

- We could use shape-based detection to look for circles

- Convert the image to greyscale and run a Hough circle transform

- Filter the circles by size ratio to find the centre, where the Lego man sits
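The "filter by size ratio" step could be sketched as below: given candidate circles `(x, y, r)` from a Hough transform (OpenCV's `cv2.HoughCircles` returns rows of `[x, y, r]`), keep pairs that share a centre and whose outer/inner radius ratio matches the bullseye. The function name, the expected ratio, and both tolerances are placeholders to measure on the real target.

```python
import math
from itertools import combinations


def find_bullseye(circles, expected_ratio, ratio_tol=0.15, centre_tol=10.0):
    """Return the centre (x, y) of the best concentric circle pair, or None.

    circles: iterable of (x, y, r) in pixels, e.g. from a Hough circle transform.
    expected_ratio: outer radius / inner radius of the real target
        (placeholder: measure the blue and red circles on the actual course).
    """
    best, best_err = None, float("inf")
    for a, b in combinations(circles, 2):
        inner, outer = sorted((a, b), key=lambda c: c[2])
        if inner[2] <= 0:
            continue
        # Concentric circles should share (almost) the same centre.
        if math.dist(inner[:2], outer[:2]) > centre_tol:
            continue
        # Relative error between the observed and expected radius ratio.
        err = abs(outer[2] / inner[2] - expected_ratio) / expected_ratio
        if err <= ratio_tol and err < best_err:
            best_err = err
            best = ((inner[0] + outer[0]) / 2, (inner[1] + outer[1]) / 2)
    return best


circles = [(100, 100, 20), (102, 99, 40), (300, 50, 25)]
print(find_bullseye(circles, expected_ratio=2.0))  # (101.0, 99.5)
```

Requiring two matching circles rather than one makes stray round objects much less likely to be mistaken for the target.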

Identify the Lego man

- Colour-based sensing

- The Lego man is mostly yellow, so apply an HSV colour threshold to find him and filter out noise

- Build a reference colour histogram for the Lego figure and compare each region's histogram against it to see if it matches

- Shape-based sensing

- Use the silhouette of a Lego minifigure as a template

- Use OpenCV contour matching to compare objects in view against the silhouette

- Object detection

- YOLO

- Train a small YOLO model on images of Lego figures in different orientations

- Run inference on the Pi and draw a bounding box around the Lego figure

- Pose estimation

- Use a 2D pose-estimation model to detect the Lego man

- Using proximity

- We know the Lego man is in the bullseye, so once we reach the bullseye we can simply detect an object with an ultrasonic sensor or something similar

Find a safe zone

- Green zones are concentric or overlapping on the red line

- Colour-based detection

- Using HSV thresholding for green, follow the red line until we find a safe zone, then find its bounding rectangle

- Semantic segmentation

- Add another segmentation class that identifies the “safe zone” pixels

- Shape-based detection

- Find the four corners of the zone, which form a polygon representing the safe zone

- IR / colour sensor

- Detect the green tape by its reflectance or with a dedicated colour sensor

Return to the start area

- Shape/colour-based detection

- The start area is a wider red strip, so we backtrack along the red line the same way we reached the bullseye/safe zone, using edge detection to spot where the tape is wider than normal

- Using encoders

- We could use our encoders to backtrack if we keep everything well calibrated
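Backtracking on encoders amounts to dead reckoning. A minimal sketch of the standard differential-drive odometry update is below; the `Pose` type, function names, tick count, wheel radius, and track width are all hypothetical placeholders to replace with measured values.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # metres; the start point is the origin
    y: float = 0.0
    theta: float = 0.0  # heading in radians


def update_pose(pose, left_ticks, right_ticks,
                ticks_per_rev=360, wheel_radius=0.03, track_width=0.15):
    """Dead-reckoning update for a differential-drive robot.

    left_ticks/right_ticks: encoder counts since the last update.
    The default geometry values are placeholders, not measurements.
    """
    metres_per_tick = 2 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_centre = (d_left + d_right) / 2
    d_theta = (d_right - d_left) / track_width
    # Midpoint integration: move along the average heading over the step.
    mid = pose.theta + d_theta / 2
    return Pose(pose.x + d_centre * math.cos(mid),
                pose.y + d_centre * math.sin(mid),
                pose.theta + d_theta)


def heading_home(pose):
    """Distance and absolute bearing from the current pose back to the start."""
    return math.hypot(pose.x, pose.y), math.atan2(-pose.y, -pose.x)
```

Errors in wheel radius or track width accumulate with every update, which is why this only works if everything stays well calibrated; it pairs naturally with line following as a cross-check.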

Conceptual Designs

Given the options for each subsystem, we can combine them into a few promising solutions

Design A

A simple solution: IR line following is reliable regardless of camera conditions, and the vision tasks work well in well-lit environments.

- Red line following: IR sensors

- Bullseye identification: Hough circles transform

- Lego man identification: Contour matching / Templating

- Return to start: Follow the line back to the start with the IR sensors and detect the wide segment of red tape
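Design A's IR line following could look roughly like this: combine an array of reflectance sensors into a signed line position, then feed it to a PID loop. The sketch assumes a hypothetical row of at least two sensors with calibrated readings (0.0 = bare floor, 1.0 = over the tape); the class, function names, gains, and base speed are placeholders to tune on the robot.

```python
class PID:
    """Textbook PID controller with a simple clamp on the integral term."""

    def __init__(self, kp, ki, kd, integral_limit=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral_limit = integral_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # Anti-windup: keep the integral from growing without bound.
        self.integral = max(-self.integral_limit,
                            min(self.integral_limit, self.integral))
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def line_position(readings):
    """Weighted-average line position across an IR sensor array.

    readings: calibrated values for >= 2 sensors, ordered left to right.
    Returns -1.0 (far left) .. +1.0 (far right), or None if no sensor
    sees the line.
    """
    n = len(readings)
    total = sum(readings)
    if total == 0:
        return None
    positions = [2 * i / (n - 1) - 1 for i in range(n)]
    return sum(p * r for p, r in zip(positions, readings)) / total


def wheel_speeds(pid, readings, dt, base=150):
    """Turn sensor readings into (left, right) PWM duties in [0, 255]."""
    pos = line_position(readings)
    if pos is None:
        return 0, 0  # line lost: stop (a real robot might search instead)
    correction = pid.update(pos, dt)
    # Line to the right (pos > 0) -> speed up the left wheel to turn right.
    left = int(max(0, min(255, base + correction)))
    right = int(max(0, min(255, base - correction)))
    return left, right
```

The weighted average gives a smooth error signal between sensors, which the derivative term needs; with a single on/off sensor the loop can only zig-zag along the tape edge.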

Design B

Uses more advanced, rigorous methods for shape and feature detection and relies mainly on a single sensor (the camera)

- Red line following: Camera colour thresholding

- Bullseye identification: Hough circle transform

- Lego man identification: Proximity

- Return to start: Follow the red line back to the start and detect the wide segment of red tape
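Design B's camera colour thresholding for line following can be sketched as: mask the red pixels, take their centroid in the bottom part of the frame (the tape nearest the robot), and steer by its horizontal offset. To stay dependency-light this swaps in a simple RGB-dominance threshold in NumPy instead of HSV; on the Pi you would more likely use OpenCV's `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)` with `cv2.inRange`, using two hue ranges because red wraps around hue 0. Function names and thresholds are placeholders.

```python
import numpy as np


def red_mask(frame_rgb, min_red=120, margin=50):
    """Boolean mask of 'red enough' pixels using simple RGB thresholding.

    frame_rgb: H x W x 3 uint8 array in RGB order.
    min_red/margin are placeholder thresholds to tune on the real tape.
    """
    f = frame_rgb.astype(np.int16)  # avoid uint8 overflow when subtracting
    r, g, b = f[..., 0], f[..., 1], f[..., 2]
    return (r >= min_red) & (r - g >= margin) & (r - b >= margin)


def steering_error(frame_rgb, roi_fraction=0.4, min_pixels=50):
    """Horizontal offset of the red line's centroid, in [-1, 1].

    Only the bottom `roi_fraction` of the frame is used. Returns None if
    too few red pixels are found (line lost).
    """
    h, w = frame_rgb.shape[:2]
    roi = red_mask(frame_rgb[int(h * (1 - roi_fraction)):])
    _, xs = np.nonzero(roi)
    if xs.size < min_pixels:
        return None
    cx = xs.mean()  # centroid column of the red pixels
    return (cx - (w - 1) / 2) / ((w - 1) / 2)
```

The returned error can drive the same kind of PID loop as an IR array would; only the sensor front end changes.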

Design C

A single, consistent approach that would be very robust if well trained; it also minimizes separate per-subsystem logic

- Red line following: Semantic segmentation

- Bullseye identification: Semantic segmentation

- Lego man identification: Semantic segmentation

- Return to start: Semantic segmentation
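The appeal of Design C is that one model output can feed every subsystem. Assuming a hypothetical segmentation model that returns per-pixel class scores (H × W × C), post-processing might collapse them into a label map and then a pixel count and centroid per class; the class list, its order, and `min_pixels` are assumptions for illustration.

```python
import numpy as np

# Hypothetical class order for the model's output channels.
CLASSES = ("background", "red_line", "bullseye", "safe_zone", "lego_man")


def summarize_segmentation(scores, min_pixels=20):
    """Collapse per-pixel class scores (H x W x C) into per-class summaries.

    Returns {class_name: (pixel_count, (cx, cy))} for every non-background
    class with at least `min_pixels` pixels.
    """
    labels = np.argmax(scores, axis=-1)  # H x W map of class indices
    summary = {}
    for idx, name in enumerate(CLASSES):
        if name == "background":
            continue
        ys, xs = np.nonzero(labels == idx)
        if xs.size >= min_pixels:
            summary[name] = (int(xs.size), (float(xs.mean()), float(ys.mean())))
    return summary
```

Downstream logic then reduces to simple rules on this summary, e.g. steer on the `red_line` centroid and switch to the approach behaviour once `bullseye` appears.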

Design D

Works well for high-contrast features, with a simple detection implementation

- Red line following: Canny edge detection

- Bullseye identification: Colour thresholding

- Lego man identification: Proximity Detection (mechanical) + colour thresholding

- Return to start: Canny edge detection to find the wide start tape
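Whether the tape comes from Canny edges or a colour mask, the "wide start tape" check reduces to measuring how wide the tape is along each scanline and comparing that with the normal line width. Because the start line runs perpendicular to the path, it should show up as a band of rows with very long runs. This sketch works on a binary mask (for example a colour threshold, or the filled region between paired Canny edges); the function names, `factor`, and `min_rows` are placeholders.

```python
def runs(row):
    """Return (start, length) for each run of truthy pixels in one image row."""
    result, start = [], None
    for i, value in enumerate(row):
        if value and start is None:
            start = i
        elif not value and start is not None:
            result.append((start, i - start))
            start = None
    if start is not None:
        result.append((start, len(row) - start))
    return result


def is_start_line(mask_rows, normal_width, factor=2.5, min_rows=3):
    """Detect the wide start tape in a binary mask.

    mask_rows: iterable of rows (lists or 1-D arrays) of booleans.
    normal_width: typical line width in pixels while following the path
        (a placeholder to calibrate).
    Returns True when at least `min_rows` rows contain a run wider than
    factor * normal_width.
    """
    wide_rows = 0
    for row in mask_rows:
        widest = max((length for _, length in runs(row)), default=0)
        if widest > factor * normal_width:
            wide_rows += 1
    return wide_rows >= min_rows
```

Requiring several wide rows, not just one, helps avoid false triggers where a tight curve briefly looks wide in a single scanline.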

Combination

We could also take a combination approach instead of choosing just one solution for each subsystem:

- Red line following: Canny edge detection for lane detection + colour confirmation

- Bullseye identification: Hough circle transform + contour analysis, where we can estimate position by comparing the circle's perceived size with its real diameter

- Safe zone detection: Canny edge detection for four-sided polygons + colour detection for confirmation

- Lego man identification: Colour thresholding (look for the bright yellow object); we can also experiment with CV feature matching and template matching

- Return to start: Follow the red line back and find the wide piece of red tape that marks the start line
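Comparing the circle's perceived size with its real diameter is the pinhole camera model: distance Z = f · D / d, with f the focal length in pixels, D the real diameter, and d the apparent diameter in pixels. The focal length can come from a camera calibration (e.g. OpenCV's `cv2.calibrateCamera`) or from one photo at a measured distance. This sketch treats the target as roughly facing the camera; for a bullseye lying on the floor the vertical extent is foreshortened, so the horizontal width is likely the more reliable measurement. Function names and numbers are illustrative.

```python
import math


def focal_length_px(known_distance, real_diameter, pixel_diameter):
    """Calibrate the focal length (pixels) from one image taken at a
    measured distance: f = d * Z / D."""
    return pixel_diameter * known_distance / real_diameter


def circle_position(u, pixel_diameter, real_diameter, f_px, image_centre_u):
    """Estimate where a circle of known size is, relative to the camera.

    u: column of the circle centre (pixels); pixel_diameter: apparent width.
    Returns (forward_distance, lateral_offset, bearing_rad), with distances
    in the same units as real_diameter.
    """
    z = f_px * real_diameter / pixel_diameter  # pinhole: Z = f * D / d
    x = (u - image_centre_u) * z / f_px        # lateral offset from the optical axis
    return z, x, math.atan2(x, z)


f = focal_length_px(known_distance=500, real_diameter=100, pixel_diameter=100)
print(circle_position(420, 50, 100, f, 320))  # z = 1000.0, x = 200.0
```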

Controls Subsystems

- PWM?

- PID?
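As a starting point for the PWM question: on an Arduino Uno, `analogWrite(pin, value)` takes a duty value from 0 to 255, and a typical H-bridge driver takes a direction signal plus a PWM signal per motor. The sketch below maps a signed speed in [-1, 1] to a direction and duty on the Pi side; the function names, deadband, and `min_duty` (the lowest duty that actually turns the motor) are assumptions to measure on the real drivetrain.

```python
def to_pwm(speed, min_duty=60, max_duty=255, deadband=0.02):
    """Map a signed speed command in [-1, 1] to (forward, duty).

    forward: True for forward, False for reverse (H-bridge direction pin).
    duty: 0 inside the deadband, otherwise min_duty..max_duty, so small
    commands still overcome motor stiction (min_duty is a placeholder).
    """
    speed = max(-1.0, min(1.0, speed))
    if abs(speed) < deadband:
        return True, 0
    duty = min_duty + (max_duty - min_duty) * abs(speed)
    return speed >= 0, int(round(duty))


def differential_drive(base, correction):
    """Mix a forward speed and a steering correction into left/right commands."""
    return to_pwm(base + correction), to_pwm(base - correction)
```

A PID steering correction would plug in as `correction`, keeping the control maths on the Pi and only raw duties on the Arduino.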

Maybe let Jackie lead the controls design, since I’m less familiar with controls